Flawed Science

June 3, 2019

I worked as a

corporate scientist, but most of the time my

colleagues and I spent little time doing actual

science. One glance at my

calendar in the morning would indicate what percentage of the day I might actually wear a

lab coat; and, some days, that percentage would be zero. Interfering with my science were such tasks as generating

purchase requisitions, participating in

conference calls, doing

safety audits, and sitting through mandated

corporate training on topics such as

government regulations and

corporate policies.

My

academic counterparts faced similar non-science demands on their time, such as

teaching. While I had the luxury of actually working in a

laboratory,

designing and conducting

experiments and

analyzing the data, the most successful academics never work in a laboratory. Instead, they have a huge crew of

graduate students and

postdoctoral associates doing the hands-on work while they spend their own time scrambling for

funding. The quest for funding involves writing

research proposals and attending

conferences at which they can "sell" their work. Their science is limited to the important task of

idea generation.

While I was privy to every detail of my experiments and all sources of

error,

managers of academic research laboratories need to trust their staff to always do the right thing and not

fudge the data; or, worse still,

pull numbers from thin air. Likewise, the people doing the experiments need to ensure that their data aren't being twisted by their superiors to conform to a particular

hypothesis. There are many examples of both of these types of

scientific misconduct, the motivation is

career advancement in the first case, and

fodder for funding in the second.

Humans have a great facility for

fooling themselves, and sometimes errors arise, not from malice, but from

self-delusion. In science, this is called

experimenter bias, a famous example being the

discovery of

N-rays. Shortly after

Wilhelm Röntgen's discovery of

X-rays in 1895,

French physicist,

Prosper-René Blondlot, discovered N-rays in 1903. Blondlot was a respected scientist, so there was no initial reason to doubt this discovery. However,

reproducibility is the

hallmark of science, and some scientists could not confirm Blondlot's discovery, while others did.

Blondlot discovered N-rays in much the same way that other scientific discoveries were made,

accidentally. He was doing experiments on X-rays, he found something unusual, and he even had

photographic evidence. Finally, in an attempt at resolution of this scientific

controversy, the

scientific journal,

Nature, enlisted the aid of

American physicist,

Robert W. Wood (1868-1955), to view these rays in Blondlot's own laboratory. Wood had failed in his detection of N-rays in his own laboratory.

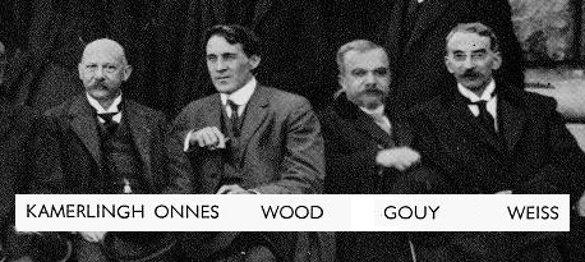

Heike Kamerlingh Onnes (1853-1926), Robert W. Wood (1868-1955), Louis Georges Gouy (1854-1926), and Pierre Weiss (1865-1940) at the Second Solay Conference on Physics, Brussels, 1913. This conference, chaired by Hendrik Lorentz (1853-1928), was entitled La structure de la matière (The structure of matter). Lorentz shared the 1902 Nobel Prize in Physics with Pieter Zeeman for his explanation of the Zeeman effect, but he's best known for the Lorentz transformation in special relativity. (Portion of a Wikimedia Commons image.)

Blondlot, who firmly believed in his discovery, was happy to have Wood confirm the existence of N-rays.

Optics laboratories are dark most of the time, and in that darkness Wood removed by

stealth an essential

prism from the N-ray

apparatus. Blondlot's people still observed N-rays. Wood's 1904 report in Nature on his "visit to one of the laboratories in which the apparently peculiar conditions necessary for the manifestation of this most elusive form of radiation appear to exist" concluded that N-rays were a purely

subjective phenomenon.[1] After that report, belief in N-rays diminished sharply, but the

true believers persisted for years thereafter. A more recent example of this might be

cold fusion.

Often, whether intentional or not, results with limited

statistical validity are published. As I wrote in an

earlier article (Hacking the p-Value, May 4, 2015),

Fisher's null hypothesis significance test is the commonly used statistical

model for analysis of experimental

data. The

null hypothesis is the hypothesis that your experimental

variable has no affect on the observed outcome of your experiment. What's computed is the

probability,

p, that the anticipated effect might still be observed even if the null hypothesis is true, and very small

p-values, such as 0.05, indicate that the null hypothesis is unlikely and that that the experimental variable does affect the observed outcome.

Physicists strive for a high standard of statistical

certainty that involves very large values of the

standard deviation. The evidence for the existence of the

Higgs boson was confirmed at the 4.9-

sigma level, meaning that there's only a one-in-a-million chance that the Higgs wasn't really detected. The objects of other

scientific disciplines, such as psychology, have less certainty, so a p-value of 0.05 or less has been their usual standard. This means that these studies have one chance in twenty of being wrong.

The journal,

Basic and Applied Social Psychology, decided in 2014 that the p-test was a weak statistical test and an "important obstacle to

creative thinking."[2] Worse yet, a 2015 article in

PLoS Biology concludes that scientists will attempt to increase chances of their paper's

publication by

tweaking experiments and

analysis methods to obtain a better p-value.[3-4] The authors of the PLoS Biology paper call this technique, "p-hacking," and it appears to be common in the

life sciences. Such p-hacking can have serious consequences, as when the effectiveness of a

pharmaceutical drug appears to be more effective than it really is.[4]

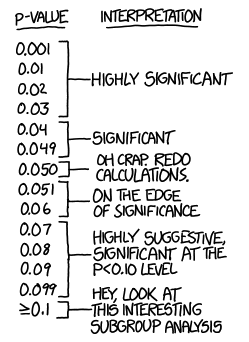

Randall Munroe weighed in on the p-value debate in his xkcd comic of January 26, 2015.

(Licensed under the Creative Commons Attribution-NonCommercial 2.5 License. Click image to view the comic on his web site.)

A more recent article on reproducibility in science has been published in Nature by Dorothy Bishop, an

experimental psychologist at the

University of Oxford (Oxford, UK).[5] Bishop cheers the recent efforts to make science more robust by reigning-in such excesses as p-hacking, and she thinks that in two

decades we will "marvel at how much time and money has been wasted on flawed research."[5]

Although the

history of science contains some guidance on the matter, scientists are generally taught how science is conducted through the example of their

professors,

advisers, and

mentors. The ideal case is that we formulate hypotheses and then test them in properly designed experiments. There will be errors in the data collecting, but proper statistical techniques will give us confidence in our results. We welcome the effort taken by others to replicate our experiments, but we realize that replication is usually done only when our results are interesting enough to be used as a starting point for other research.

Bishop writes that too many scientists ride with "the

four horsemen of the reproducibility

apocalypse;" namely, publication

bias, low statistical power, p-value hacking and

HARKing.[5] The term, HARKing, was defined in a 1998 paper by

Norbert L. Kerr, a psychologist at

Michigan State University, as "hypothesizing after results are known."[6] In most cases being guided to do new experiments by trends suggested by your data is

innocent enough. The problem, however, is when you

mine the data to create some hypothesis that passes the p-value test.

Les Quatres Cavaliers (The Four Horsemen), a 1086 parchment illumination by Martinus from the Burgo de Osma Cathedral archives. (Reformatted Wikimedia Commons image. Click for larger image.)

There are two problems with null hypothesis testing and the resulting p-score. The first, known to all researchers, is that when the experimental data don't fit the hypothesis, not only is the hypothesis rejected, but likewise any chance of publication. That's because researchers aren't motivated to write up a study that shows no effect, and journal

editors are unlikely to accept such a paper for publication. When the study is p-hacked, acceptance of a paper is less of a problem. The p-score is problematic for the other reason that it's not a very powerful statistical treatment of data.

This publication bias means that when multiple studies of a drug's effectiveness show no results, but there's one that shows a benefit, it's that study that's published, so there's a distorted view of the drug's

efficacy.[5] Fortunately, some journals are adopting the "registered report" format, in which the experimental question and study design are registered before experiment and data collection, and this method stops publication bias, p-hacking and HARKing.[5] Also, journals and

funding agencies are requiring that methods, experimental data and analysis programs are openly available.[5]

References:

- R. W. Wood, "The n-Rays," Nature, vol. 70, no. 1822 (September 29, 1904), pp. 530-531, https://doi.org/10.1038/070530a0.

- David Trafimowa and Michael Marksa, "Editorial: Publishing models and article dates explained," Basic and Applied Social Psychology, vol. 37, no. 1 (February 12, 2015), pp. 1-2, DOI: 10.1080/01973533.2015.1012991.

- Megan L. Head, Luke Holman, Rob Lanfear, Andrew T. Kahn, and Michael D. Jennions, "The Extent and Consequences of P-Hacking in Science," PLOS Biology, vol. 13, no. 3 (March 13, 2015), DOI: 10.1371/journal.pbio.1002106. This is an open access paper with a PDF file available here.

- Scientists unknowingly tweak experiments, Australian National University Press Release, March 18, 2015.

- Dorothy Bishop, "Rein in the four horsemen of irreproducibility," Nature, vol. 568, (April 24, 2019), p.435, doi: 10.1038/d41586-019-01307-2.

- Norbert L. Kerr, "HARKing: Hypothesizing After the Results are Known," Personality and Social Psychology Review, vol. 2, no. 3 (August 1, 1998), pp. 196-217, https://doi.org/10.1207/s15327957pspr0203_4. Available as a PDF file here.

Linked Keywords: Research and development; corporate scientist; colleague; science; calendar; lab coat; purchase requisition; conference call; safety; quality audit; training and development; corporate training; regulatory agency; government regulation; business ethics; corporate policy; academia; academic; education; teaching; laboratory; design of experiments; experiments; data analysis; postgraduate education; graduate student; postdoctoral research; postdoctoral associate; funding of science; research proposal; academic conference; creativity; idea generation; error; management; manager; fudge factor; fudging the data; pulling numbers from thin air; hypothesis; scientific misconduct; career; fodder; human; delusion; fooling themselves; self-delusion; observer-expectancy effect; experimenter bias; scientific discovery; N-rays; Wilhelm Röntgen; X-rays; France; French; physicist; Prosper-René Blondlot; reproducibility; hallmark; serendipity; accident; photograph; photographic; evidence; controversy; scientific journal; Nature; American; Robert W. Wood (1868-1955; Heike Kamerlingh Onnes (1853-1926); Louis Georges Gouy (1854-1926); Pierre Weiss (1865-1940); Second Solay Conference on Physics; chairman; Hendrik Lorentz (1853-1928); structure; matter; Nobel Prize in Physics; Pieter Zeeman; Zeeman effect; Lorentz transformation; special relativity; Wikimedia Commons; optics; sleight of hand; stealth; prism (optics); apparatus; subjectivity; subjective; phenomenon; belief; true believer; cold fusion; statistics; statistical; Fisher's null hypothesis significance test; mathematical model; data; null hypothesis; variable; probability; p-value; physicist; certainty; standard deviation; Higgs boson; sigma; science; scientific; discipline (academia); Basic and Applied Social Psychology; creativity; creative thinking; PLoS Biology; scientific literature; publication; tweaking; analysis method; life sciences; pharmaceutical drug; xkcd cartoon 1478; Randall Munroe; xkcd comic; Creative Commons Attribution-NonCommercial 2.5 License; Dorothy Bishop; experimental psychology; experimental psychologist; University of Oxford (Oxford, UK); decade; history of science; professor; adviser; mentor; Four Horsemen of the Apocalypse; apocalypse; bias; Norbert L. Kerr; Michigan State University; innocence; innocent; mining; mine; parchment; illuminated manuscript; illumination; Burgo de Osma Cathedral; archive; journal editor; efficacy; funding agency.